Enterprise Generative AI Adoption Failures: Causes, Case Studies, and Strategies for Success

Enterprises are investing billions in generative AI, but 95% of pilots fail to scale or drive impact. This report explores why adoption stalls, the pitfalls companies face, and strategies to turn GenAI pilots into measurable business value.

Introduction: The Promise vs. Reality of Generative AI in Business

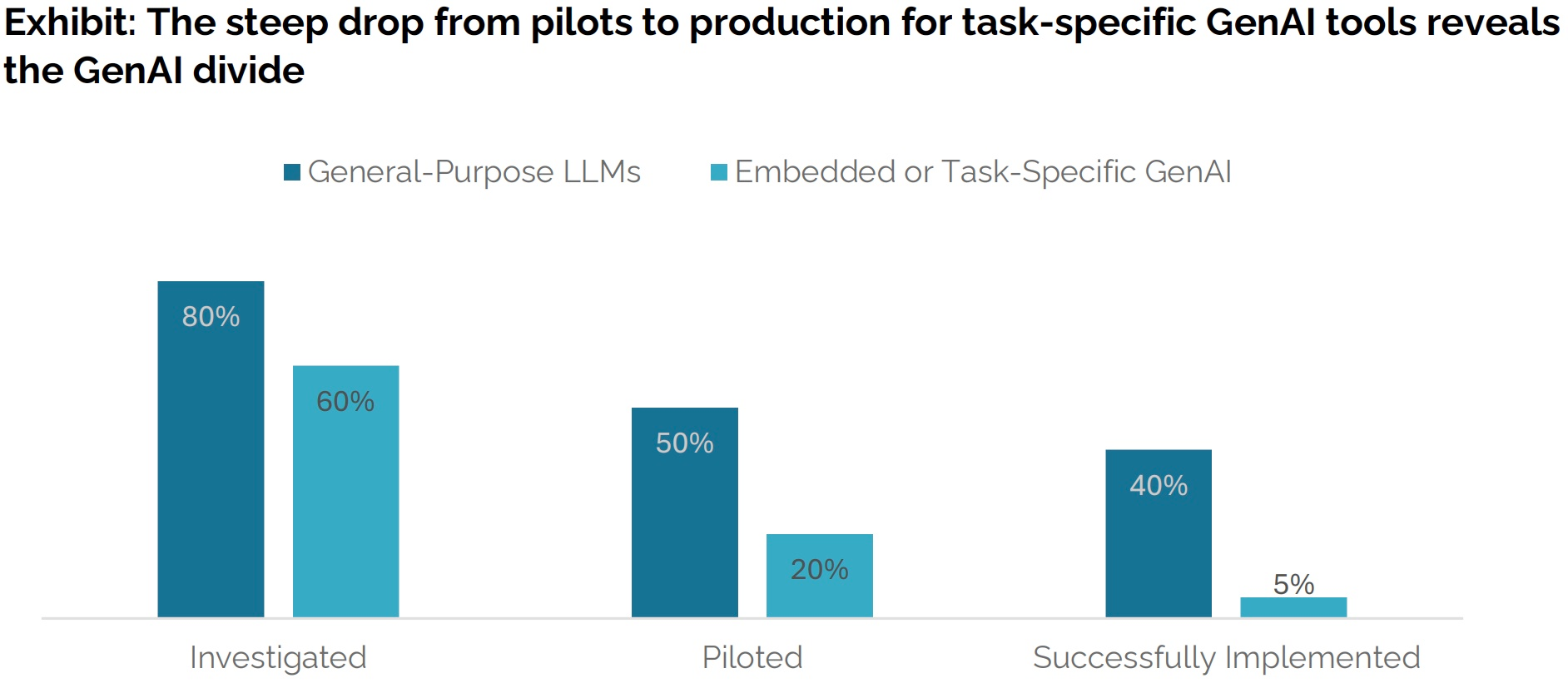

Generative AI has been heralded as a game-changer for enterprises, sparking a rush of investment and pilot projects. Yet recent research reveals a stark “GenAI divide” between hype and actual impact. A 2025 MIT study found U.S. companies poured $35–40 billion into generative AI initiatives, but only ~5% of these AI pilots delivered rapid revenue gains or measurable business value; the vast majority yielded little to no P&L impact. Put differently, roughly 95% of enterprise genAI projects stall at the experiment or proof-of-concept stage without measurable returns. The problem isn’t that generative models lack capability; rather, most corporate AI efforts stall before they’re integrated deeply enough to transform operations. This report examines why so many enterprise genAI initiatives fail at the implementation stage, highlighting real-world examples across industries and expert analyses of the pitfalls. It also outlines key strategies organizations can adopt to avoid these common failures and turn promising pilots into tangible outcomes.

Figure: Despite high interest in generative AI, very few custom enterprise AI pilots make it from proof-of-concept to production deployment (only ~5%), illustrating a steep drop-off from initial experiment to scalable solution. Most efforts remain stuck in pilot stage, creating a “GenAI divide” between the handful of successes and the bulk of stalled projects.

Case Studies of Generative AI Adoption Failures

Real-world examples across different sectors underscore the challenges enterprises face in implementing generative AI at scale. The following cases highlight common failure modes and lessons learned:

| Case (Industry) | GenAI Initiative | What Went Wrong | Outcome & Lesson |
|---|---|---|---|
| CNET / Red Ventures (Media) | AI-written personal finance articles | Factual errors and plagiarized passages due to lack of accuracy and oversight. Over 50% of articles required corrections. | The project was paused. Highlighted reputational risk of deploying GenAI without rigorous human fact-checking. |
| IBM Watson for Oncology (Healthcare) | AI system for cancer treatment recommendations | Overhyped capabilities, insufficient high-quality data, and unsafe/incorrect treatment advice. Not adapted to real clinical workflows. | Despite ~$4B investment, Watson Health failed. Lost trust of physicians and clients. Showed the danger of marketing AI before it’s clinically proven. |
| Samsung (Electronics) | Employee use of ChatGPT for coding assistance and notes | Data governance failure: employees entered confidential source code and meeting notes into ChatGPT. Risk of proprietary info leaking externally. | Sensitive business details exposed. Samsung banned or restricted external GenAI use until safeguards were in place. Emphasized importance of AI usage policies and IP protection. |

These cases reflect broader patterns. In media and content generation, lack of accuracy and human editorial oversight can turn AI into a liability (as seen with CNET). In highly regulated or knowledge-intensive fields like healthcare or law, generative AI that isn’t deeply vetted can produce “hallucinations” (outputs that seem convincing but are factually baseless), with serious consequences. For example, multiple lawyers have faced sanctions for submitting ChatGPT-generated legal briefs containing fake case citations, highlighting how blind trust in generative AI can backfire in enterprise settings. Meanwhile, in corporate environments handling sensitive data (tech, finance, etc.), misuse of AI tools without governance can lead to data breaches or compliance violations, as the Samsung scenario demonstrates. Each failure has different surface causes, but they point to a set of recurring underlying issues explored in the next section.

Why 95% of Enterprise GenAI Projects Fail: Common Pitfalls

Enterprise attempts to implement generative AI often falter due to a combination of organizational, technical, and strategic challenges.

Key reasons for the high failure rate include:

- Lack of Integration into Business Workflows: Many pilots remain isolated “science projects” that never merge with core processes. The MIT NANDA report concluded the primary barrier isn’t the AI models themselves, but poor integration and alignment with real workflows. AI solutions are frequently built as demos or bolt-ons that don’t fit daily operations, so employees simply don’t adopt them. A manufacturing COO candidly noted, “the hype...says everything has changed, but in our operations, nothing fundamental has shifted” – aside from minor efficiency gains in isolated tasks. In short, if generative AI tools don’t mesh with how work is actually done (or require too many manual workarounds), they stall out before delivering value.

- “Learning Gap” – No Continuous Improvement: Unlike humans, most current genAI deployments don’t learn from ongoing use. They lack memory of prior interactions and fail to adapt to user feedback. This brittleness leads users to abandon AI tools for high-stakes work. As MIT researchers put it, today’s typical generative AI “forgets context, doesn’t learn, and can’t evolve,” making it ill-suited for complex, long-running tasks. For example, employees might try a generative chatbot for drafting a report, but if it can’t retain client-specific instructions or past corrections, it will repeat mistakes and erode trust. This learning gap leaves many pilots stuck in perpetual trial mode without improvement, undermining broader adoption.

- Unclear ROI and Misaligned Use Cases: Fuzzy return on investment (ROI) is a major hurdle. Enterprises often jump on the genAI bandwagon without a clear business case, leading CFOs to question the value. Fewer than 5% of organizations have moved beyond small-scale GenAI experiments to widespread use, in part because many early projects can’t quantify tangible benefits. A common misstep is focusing on flashy customer-facing applications (e.g. chatbots for marketing) that generate buzz but minimal bottom-line impact. Studies show companies poured the bulk of their AI budgets into sales and marketing use cases, yet back-office automation (like document processing, customer service ops, HR workflows) delivered higher returns through cost savings and efficiency gains. In other words, many enterprises chased “visibility over value”, investing in demos or AI features that impress in theory or on social media, rather than targeting the unglamorous processes where AI could actually save millions. When those high-profile pilots don’t move the needle financially, momentum fizzles out.

- Data Quality and Hallucinations: Generative AI is only as good as the data and training it builds on, and many enterprise applications face a data mismatch. Models like GPT-4 are trained on vast internet text, much of which is irrelevant or too general for niche business tasks. One analysis found less than 1% of the training data in general LLMs is relevant to specific enterprise domains. The result is unreliable outputs – e.g. in one tax domain benchmark, GPT-4 answered only ~54% of questions correctly (barely above random chance). Domain-specific models can fare even worse if not properly trained (Cohere’s model scored only 22% on the same tax test). This lack of accuracy, combined with the propensity of AI to confidently fabricate information (“hallucinate”), leads to serious errors if outputs aren’t rigorously checked. In the CNET case, for instance, the AI tool produced numerous calculation errors in finance articles and even made up some facts, requiring embarrassing corrections. Poor data quality (e.g. outdated or biased training sets) further exacerbates the problem, as does the challenge of integrating an AI system with disparate enterprise data sources (often locked in legacy systems). Without cleaning and curating data – and constraining the AI with factual references – many pilots yield unreliable results that stakeholders cannot trust.

- High Costs and Technical Complexity: Moving generative AI from pilot to production can be expensive and technically demanding, causing many projects to stall. Large language models require significant compute power; if companies rely on third-party API access, costs can skyrocket as usage scales. According to venture benchmarks, only about 2% of GenAI proofs of concept successfully transition to production, often because organizations realize that the cost and effort of operationalizing the POC far exceed initial expectations. AI startups have reported spending as much as 80% of their budgets on AI infrastructure (GPU hardware, cloud compute, etc.) to support such models. Enterprises face a similar issue: vendor APIs can accelerate time-to-value, but at the cost of high ongoing fees, while hosting models internally demands specialized infrastructure and talent. Either way, soaring costs without immediate ROI make it hard to sustain projects. As an example, one industry survey found that over 50% of large companies had already suffered major financial losses from AI initiatives due to poor management and governance. Notably, 10% of companies reported losses over $200 million from AI project failures (e.g. cost overruns, unforeseen errors). Such costly setbacks make enterprise leaders more cautious about pushing pilots into full deployment.

- Workforce Resistance and Change Management Issues: Introducing AI into established workflows often meets human resistance. Employees may fear that AI will replace jobs or simply find it cumbersome to use unfamiliar tools. In the MIT study’s survey of enterprise users, the number one barrier to scaling AI pilots was “unwillingness to adopt new tools” on the part of employees. Even when workers experiment with AI (often out of curiosity), getting widespread, sustained usage is another matter. Lack of proper training and UX design can make AI tools intimidating or frustrating, leading staff to quietly revert to old methods. Change management is frequently underestimated – many projects see an initial drop in productivity when AI tools are first introduced, as teams climb the learning curve. Without executive support and patience to get past that dip, the initiative may be deemed a failure prematurely. Additionally, if leadership and middle management are not aligned, or if no one communicates a clear vision of how the AI will help employees (rather than replace them), adoption will languish. This dynamic was seen in several Deloitte case studies, where employee unfamiliarity and skill gaps slowed GenAI project timelines until extra training and communication were provided. In short, cultural and change-management factors can make or break an AI deployment.

- Trust, Privacy, and Compliance Concerns: Finally, many enterprise AI efforts falter over trust and risk issues. Generative AI’s tendency to sometimes produce inappropriate or biased content, along with uncertainties about data security, gives CIOs and risk managers pause. Over half of people surveyed in 2023 reported feeling more concerned than excited about AI’s growing role, and this skepticism extends to corporate environments. If users and stakeholders do not trust the AI’s outputs, they simply won’t use it for mission-critical decisions. Furthermore, industries with strict regulations (finance, healthcare, government) have legitimate worries about compliance: for example, ensuring an AI’s decisions can be explained and audited, or preventing exposure of personal/customer data. Many large firms are reluctant to send sensitive data to external AI services due to privacy fears. The Samsung incident above is a vivid example: even absent malicious intent, using a public AI tool on confidential data created an unacceptable leakage risk. Regulators have started to step in as well (e.g. Italy’s temporary ban on ChatGPT over privacy concerns), adding more uncertainty. All these factors, if not addressed, undermine user and management trust, often causing AI initiatives to be shelved “for now” due to a legal/compliance veto or reputational risk. In essence, a generative AI project that isn’t responsibly governed will likely either never get approval to go live, or will implode at the first serious mistake or security scare.
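To make the cost pitfall above more concrete, the following is a minimal sketch of how third-party API spend might grow between a pilot and a production rollout. Every number in it (request volumes, token counts, per-1,000-token prices) is a hypothetical placeholder rather than a quote from any vendor, and the `UsageProfile` and `monthly_api_cost` names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageProfile:
    """Hypothetical usage assumptions for one deployment stage."""
    requests_per_day: int
    input_tokens_per_request: int
    output_tokens_per_request: int


def monthly_api_cost(profile: UsageProfile,
                     price_in_per_1k: float,
                     price_out_per_1k: float,
                     days: int = 30) -> float:
    """Estimate monthly spend for a token-priced API.

    Prices are per 1,000 tokens and are assumptions supplied by the caller.
    """
    per_request = (
        profile.input_tokens_per_request / 1000 * price_in_per_1k
        + profile.output_tokens_per_request / 1000 * price_out_per_1k
    )
    return per_request * profile.requests_per_day * days


# Hypothetical pilot vs. production volumes with identical prompts.
pilot = UsageProfile(requests_per_day=200,
                     input_tokens_per_request=1500,
                     output_tokens_per_request=500)
production = UsageProfile(requests_per_day=50_000,
                          input_tokens_per_request=1500,
                          output_tokens_per_request=500)

for name, profile in [("pilot", pilot), ("production", production)]:
    cost = monthly_api_cost(profile, price_in_per_1k=0.01, price_out_per_1k=0.03)
    print(f"{name}: ${cost:,.2f} per month")
```

Under these assumed inputs the per-request cost stays the same, so spend scales linearly with volume: a 250× increase in daily requests produces a 250× increase in the monthly bill. Running this kind of estimate before a pilot starts is one way to surface operational costs early.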

Figure: Top five barriers that enterprise teams identified as hindering generative AI pilots from scaling. Organizational and human factors dominate – resistance to new tools was the highest-rated obstacle, followed by concerns about output quality (accuracy/hallucinations) and poor user experience. Lack of executive sponsorship and difficult change management were also significant barriers. This suggests that even when the technology is viable, getting people, processes, and leadership aligned is the bigger challenge.

Taken together, these pitfalls explain why so many generative AI efforts never graduate from sandbox to solution. In most cases, it’s not that the underlying AI models can’t do anything useful – it’s that a host of supporting factors (from data readiness and cost management to user buy-in and workflow integration) are lacking. The minority of organizations that do succeed with enterprise AI tend to systematically overcome these challenges. The next section highlights what those successful adopters do differently, offering a guide to strategies for avoiding the common pitfalls.

Strategies to Achieve Successful GenAI Adoption

Despite the high failure rate, a growing body of expertise and best practices can help enterprises tilt the odds in favor of success. Organizations that have bridged the “GenAI divide” generally take a more disciplined, value-driven approach to adoption. Below, we outline key strategies – drawn from research reports and industry experts – to avoid common generative AI implementation pitfalls and to realize tangible benefits. These strategies are summarized in the table, followed by further explanation:

| Common Pitfall | Strategy to Overcome It |
|---|---|
| No clear ROI or business case | Start small, with focused pilots and measurable KPIs. Rather than betting big upfront, implement GenAI incrementally through pilot projects and controlled trials. Choose a specific use case and define success (e.g., 20% reduction in processing time, or $X cost savings), then rigorously A/B test versus the status quo. This demonstrates tangible ROI on a small scale, delivers quick wins, and builds buy-in from stakeholders. |
| Misalignment with workflows | Integrate AI into existing processes instead of forcing users to change habits. Embed AI features into current systems (CRM, ERP, helpdesk) so it augments existing work. Involve end users, domain experts, and IT in design/testing. Target narrow, high-value workflows where AI can deliver a 10× improvement. Fit the AI to real user needs and scale gradually to boost adoption. |
| Data quality issues & hallucinations | Invest in data preparation and domain-specific knowledge integration. Establish strong data governance and curate high-quality datasets. Use techniques like Retrieval-Augmented Generation (RAG) to ground outputs in trusted sources. Connect AI to company databases or knowledge bases to reduce hallucinations. Keep humans in the loop with expert reviews. Treat AI output as a draft, not final truth. |
| High implementation costs | Optimize for cost-efficiency and scalability from day one. Start with smaller, domain-tuned or open-source models rather than expensive foundation models. Use prompt optimization and caching to reduce costs. As usage grows, consider in-house infrastructure (GPUs) for stable, high utilization. Continuously monitor AI FinOps to track and reduce per-output costs. |
| User resistance and low trust | Focus on user-centric design, training, and transparency. Train employees not just on how, but why AI is being adopted. Empower “AI champions” in teams to mentor peers. Build trust through explainability, ethical guardrails, and transparency about limitations. Position AI as a partner that supports work rather than a threat. |
| Going it alone (DIY syndrome) | Leverage external expertise and solutions where appropriate. Vendor tools have higher success rates than in-house builds. Partner with vendors or consultants, then customize solutions to fit business needs. Internal AI skills should focus on integration and proprietary tweaks, not reinventing core algorithms. In regulated sectors, evaluate vetted platforms instead of building from scratch. |
| Lack of executive sponsorship | Treat GenAI as a strategic enterprise initiative with C-suite leadership. Align AI adoption with company vision and set realistic timelines (12–18+ months). Allocate sufficient budget for training, redesign, and iteration. Create an AI center of excellence or steering committee. Strong executive support signals AI is a priority, encouraging persistence beyond early setbacks. |
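The Retrieval-Augmented Generation (RAG) strategy in the table can be illustrated with a toy sketch. It assumes a tiny in-memory knowledge base and a naive word-overlap scorer, both invented here for illustration; a real deployment would more likely use a document store with embedding-based search. The sketch stops at building the grounded prompt, since the model call itself depends on whichever vendor or in-house model is in use.

```python
import re
from collections import Counter

# Hypothetical knowledge base; stands in for a company database or wiki.
KNOWLEDGE_BASE = {
    "refund-policy": "Refunds are issued within 14 days of a return request.",
    "warranty": "Hardware is covered by a 24 month limited warranty.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, top_k=2):
    """Return up to top_k (doc_id, text) pairs ranked by word overlap.

    Word overlap is a deliberately simple stand-in for real retrieval.
    """
    question_words = Counter(tokenize(question))
    scored = []
    for doc_id, text in KNOWLEDGE_BASE.items():
        overlap = sum((question_words & Counter(tokenize(text))).values())
        if overlap > 0:
            scored.append((overlap, doc_id, text))
    # Highest overlap first; ties broken alphabetically by document id.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:top_k]]


def build_grounded_prompt(question, top_k=2):
    """Build a prompt that restricts the model to retrieved sources.

    Returns None when nothing relevant is found, so the caller can route
    the question to a human instead of letting the model guess.
    """
    passages = retrieve(question, top_k)
    if not passages:
        return None
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite the source id. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


print(build_grounded_prompt("How long is the hardware warranty?"))
```

Two design choices mirror the table's advice: the prompt tells the model to cite a source id, which gives human reviewers something to check, and the `None` return keeps a person in the loop when no trusted source applies.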

By applying these strategies, enterprises can significantly improve their odds of success with generative AI. For example, firms that started with narrow pilots in high-impact areas (like automating a tedious insurance claims process or speeding up R&D documentation) often saw quick efficiency wins, which they then scaled across similar processes. Emphasizing back-office and operational use cases first, where AI can reliably save costs, creates momentum (and savings) that can fund more ambitious customer-facing innovations later. Additionally, organizations that empowered tech-savvy business users to lead deployments – rather than exclusively data scientists – reported faster adoption and better workflow fit, since those users understood the day-to-day context best. Many successful adopters also credit a “human-in-the-loop” approach: they did not attempt to eliminate humans from the process early on, but rather used AI to augment human work and gradually increased automation as confidence grew. This approach builds trust and allows the system to learn from human feedback, closing the “learning gap” over time.
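Whether a narrow pilot has actually produced an efficiency win can be checked against a KPI target agreed in advance, such as the 20% reduction in processing time used as an example in the strategy table. The sketch below is a minimal illustration under stated assumptions: the processing times are made-up sample data, the function names are hypothetical, and a real evaluation would also need a larger sample and a statistical significance test before any scaling decision.

```python
from statistics import mean


def relative_reduction(control_minutes, treatment_minutes):
    """Fractional reduction in mean processing time versus the control group."""
    baseline = mean(control_minutes)
    if baseline <= 0:
        raise ValueError("control mean must be positive")
    return (baseline - mean(treatment_minutes)) / baseline


def pilot_meets_target(control_minutes, treatment_minutes, target=0.20):
    """True when the observed reduction reaches the pre-agreed KPI target."""
    return relative_reduction(control_minutes, treatment_minutes) >= target


# Made-up processing times (minutes per claim) for illustration only.
status_quo = [30, 34, 28, 32, 36]     # control group: existing process
ai_assisted = [24, 26, 22, 25, 23]    # treatment group: AI-assisted process

reduction = relative_reduction(status_quo, ai_assisted)
print(f"Observed reduction: {reduction:.0%}")
print("Meets 20% target:", pilot_meets_target(status_quo, ai_assisted))
```

Fixing the target before the pilot runs keeps the success criterion from drifting toward whatever the pilot happens to deliver.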

Finally, forward-looking enterprises are already preparing for the next wave of generative AI evolution – what MIT researchers call “agentic AI” or AI that can remember, adapt, and make autonomous decisions within set bounds. While that future is emerging, the actions to be ready for it (e.g. robust data management, governance frameworks, modular AI architectures) are steps that also make current AI deployments more successful. In essence, building a strong foundation now – through good data, user trust, workflow integration, and clear ROI focus – not only helps overcome today’s high failure rate, but also positions a company to harness more powerful AI capabilities in the near future.

Conclusion

Generative AI’s transformative potential for enterprise is real, but as the evidence shows, realizing that potential requires more than just plugging a powerful model into the organization. Roughly 95% of corporate GenAI initiatives have failed to deliver significant value because of strategic and operational missteps, not fundamental flaws in the AI technology. By studying the failures – from high-profile cases like IBM Watson’s struggles in healthcare to the quieter stagnation of countless pilots that never scaled – we learn that success hinges on execution and alignment: integrating AI into the fabric of the business, focusing on use cases with clear value, ensuring data and systems are prepared, controlling costs, and bringing people along through training and governance. The encouraging news is that a growing cohort of enterprises is getting it right, bridging the gap between AI experiments and enterprise-wide impact. Their experiences and the strategies outlined above provide a roadmap for others to follow. In summary, companies that treat generative AI as a strategic, long-term operational capability – with iterative learning, proper change management, and a relentless focus on business outcomes – are far more likely to join the 5% who reap real rewards, rather than becoming another statistic in the 95% that fail to launch. With prudent planning and human-centered implementation, generative AI can move from novelty to an engine of productivity and innovation in the enterprise.

Sources: Recent reports and expert analyses were used in compiling this assessment, including MIT Media Lab’s State of AI in Business 2025, industry case studies and surveys (Deloitte 2024 GenAI study, AI Infrastructure Alliance report), news on specific company experiences (Computerworld, Fortune, Reuters, The Verge among others), and thought leadership from AI practitioners (Benhamou Global Ventures blogs). These sources are cited throughout the text with relevant details to support the findings and recommendations presented.